Trinity-RFT: A General-Purpose and Unified Framework for Reinforcement Fine-Tuning of Large Language Models

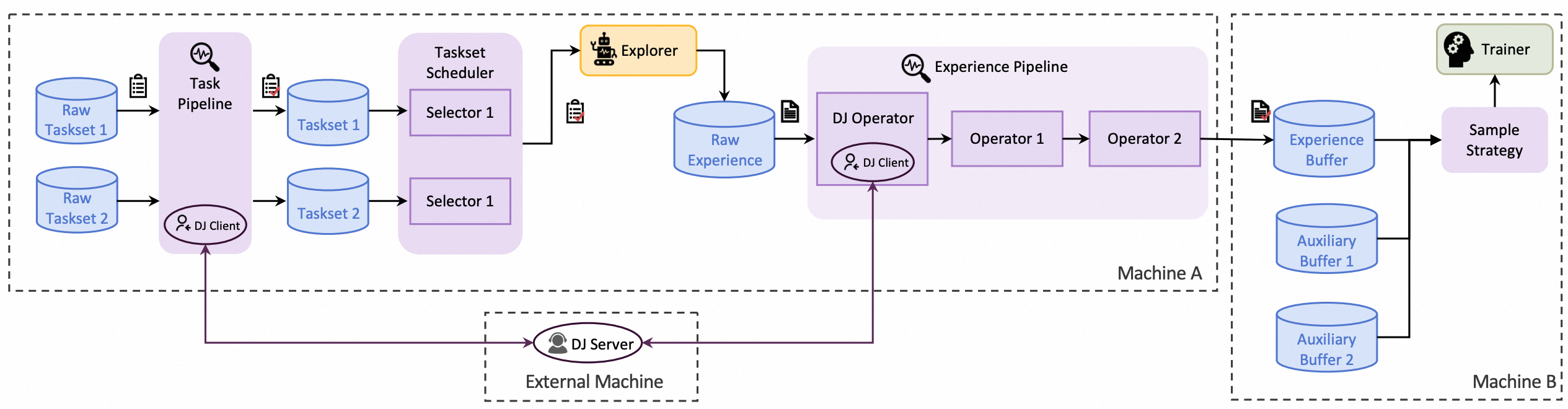

Trinity-RFT is a general-purpose, flexible and user-friendly framework for LLM reinforcement fine-tuning (RFT). It decouples RFT into three components that work in coordination:

- Explorer generates experience data via agent-environment interaction;
- Trainer updates model weights by minimizing losses on the data;
- Buffer pipelines data processing throughout the RFT lifecycle.
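The division of labor above can be pictured as a producer/consumer loop. The sketch below is a single-process illustration under stated assumptions: `Experience`, `explore`, `train_step`, `run_sync` and all signatures are hypothetical and are not Trinity-RFT's API, the policy is any string-to-string function, and the trainer only records a placeholder loss instead of updating weights.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List


@dataclass
class Experience:
    # One rollout: the task prompt, the policy's response and its scalar reward.
    prompt: str
    response: str
    reward: float


class Buffer:
    """FIFO store between the explorer (producer) and the trainer (consumer)."""

    def __init__(self, capacity: int = 1024) -> None:
        # deque(maxlen=...) silently drops the oldest experience once full.
        self._items: Deque[Experience] = deque(maxlen=capacity)

    def write(self, batch: List[Experience]) -> None:
        self._items.extend(batch)

    def read(self, batch_size: int) -> List[Experience]:
        n = min(batch_size, len(self._items))
        return [self._items.popleft() for _ in range(n)]

    def __len__(self) -> int:
        return len(self._items)


class Explorer:
    """Runs the current policy on tasks and scores each response."""

    def __init__(self, policy: Callable[[str], str],
                 reward_fn: Callable[[str, str], float]) -> None:
        self.policy = policy
        self.reward_fn = reward_fn

    def explore(self, tasks: List[str]) -> List[Experience]:
        experiences = []
        for prompt in tasks:
            response = self.policy(prompt)
            experiences.append(Experience(prompt, response, self.reward_fn(prompt, response)))
        return experiences


class Trainer:
    """Consumes experiences; a real trainer would compute a loss and update weights."""

    def __init__(self) -> None:
        self.steps = 0
        self.samples_seen = 0

    def train_step(self, batch: List[Experience]) -> float:
        self.steps += 1
        self.samples_seen += len(batch)
        # Placeholder "loss" (negative mean reward); no gradients are computed here.
        return -sum(e.reward for e in batch) / max(len(batch), 1)


def run_sync(tasks: List[str], explorer: Explorer, buffer: Buffer,
             trainer: Trainer, batch_size: int = 2) -> List[float]:
    # Synchronous schedule: explore a batch of tasks, then immediately train on it.
    losses = []
    for start in range(0, len(tasks), batch_size):
        buffer.write(explorer.explore(tasks[start:start + batch_size]))
        losses.append(trainer.train_step(buffer.read(batch_size)))
    return losses


if __name__ == "__main__":
    explorer = Explorer(policy=str.upper, reward_fn=lambda p, r: float(r.isupper()))
    print(run_sync(["a", "b", "c"], explorer, Buffer(), Trainer()))  # [-1.0, -1.0]
```

Because the explorer and trainer interact only through the buffer, the synchronous loop could in principle be swapped for two processes that write and read independently, which is the kind of decoupling the three-component design aims for.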

Trinity-RFT provides functionalities for users with different backgrounds and objectives:

- 🤖 Agent application developers: Train LLM-powered agents and improve their capabilities in specific domains [tutorial]
- 🧠 Reinforcement learning researchers: Design, implement and validate new RL algorithms using compact, plug-and-play modules that allow non-invasive customization [tutorial]
- 📊 Data engineers: Create RFT datasets and build data pipelines for cleaning, augmentation, and human-in-the-loop scenarios [tutorial]

| Category | Tutorials / guidelines |
|---|---|
| Run diverse RFT modes | Quick start: GRPO on GSM8k; Off-policy RFT; Fully asynchronous RFT; Offline learning by DPO or SFT |
| Multi-step agentic RL | Concatenated multi-turn workflow; General multi-step workflow; ReAct workflow with an agent framework; Example: train a web-search agent |
| Full-lifecycle data pipelines | Rollout task mixing and selection; Online task curriculum (📝 paper); Research project: learn-to-ask (📝 paper); Experience replay with prioritization; Advanced data processing & human-in-the-loop |
| Algorithm development | RL algorithm development with Trinity-RFT (📝 paper); Research project: group-relative REINFORCE (📝 paper); Non-verifiable domains: RULER, trainable RULER, rubric-as-reward |
| Going deeper into Trinity-RFT | Full configurations; Benchmark toolkit for quick verification and experimentation; Understand the coordination between explorer and trainer |

Note: For more tutorials, please refer to the Trinity-RFT documentation.

- Flexible RFT Modes:
  - Supports synchronous/asynchronous, on-policy/off-policy, and online/offline RL.
  - Rollout and training can run separately and scale independently across devices.
  - Experience replay boosts sample and time efficiency.
- Agentic RL Support:
  - Supports both concatenated and general multi-step agentic workflows.
  - Directly trains agent applications developed with agent frameworks such as AgentScope.
- Full-Lifecycle Data Pipelines:
  - Enables pipeline processing of rollout tasks and experience samples.
  - Active data management (prioritization, cleaning, augmentation, etc.) throughout the RFT lifecycle.
  - Native support for multi-task joint learning and online task curriculum construction.
- User-Friendly Design:
  - Plug-and-play modules and decoupled architecture, facilitating easy adoption and development.
  - Rich graphical user interfaces enable low-code usage.
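Experience replay with prioritization, mentioned in the feature list above, can be illustrated with a generic prioritized replay buffer. This is a sketch of the general technique, not Trinity-RFT's buffer: the class name, the caller-supplied priority score (for example a reward- or advantage-based value), lowest-priority eviction and sampling in proportion to `priority ** alpha` are all assumptions made for illustration.

```python
import random
from typing import Generic, List, Optional, TypeVar

T = TypeVar("T")


class PrioritizedReplayBuffer(Generic[T]):
    """Stores past experiences and resamples them in proportion to priority ** alpha."""

    def __init__(self, capacity: int, alpha: float = 1.0, seed: Optional[int] = None) -> None:
        self.capacity = capacity
        self.alpha = alpha  # 0 -> uniform sampling; larger -> favor high priorities more
        self.items: List[T] = []
        self.priorities: List[float] = []
        self._rng = random.Random(seed)

    def add(self, item: T, priority: float) -> None:
        if priority < 0:
            raise ValueError("priority must be non-negative")
        if len(self.items) >= self.capacity:
            # Evict the current lowest-priority entry to make room.
            worst = min(range(len(self.priorities)), key=self.priorities.__getitem__)
            del self.items[worst]
            del self.priorities[worst]
        self.items.append(item)
        self.priorities.append(priority)

    def sample(self, k: int) -> List[T]:
        # Sampling with replacement; random.choices raises ValueError if all weights are zero.
        weights = [p ** self.alpha for p in self.priorities]
        return self._rng.choices(self.items, weights=weights, k=k)


if __name__ == "__main__":
    buf: PrioritizedReplayBuffer[str] = PrioritizedReplayBuffer(capacity=3, seed=0)
    for name, prio in [("low", 0.0), ("mid", 1.0), ("high", 3.0)]:
        buf.add(name, prio)
    draws = buf.sample(100)
    print({name: draws.count(name) for name in buf.items})
```

Replaying high-priority samples more often is one way to reuse informative experience without collecting new rollouts; the eviction and weighting rules here are simple placeholders that a real system would tune.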

- [2025-11] Introducing Learn-to-Ask: a framework for training proactive dialogue agents from offline expert data (paper).

- [2025-11] Introducing BOTS: online RL task selection for efficient LLM fine-tuning (paper).

- [2025-11] [Release Notes] Trinity-RFT v0.3.2 released: bug fixes and advanced task selection & scheduling.

- [2025-10] [Release Notes] Trinity-RFT v0.3.1 released: multi-stage training support, improved agentic RL examples, LoRA support, debug mode and new RL algorithms.

- [2025-09] [Release Notes] Trinity-RFT v0.3.0 released: enhanced Buffer, FSDP2 & Megatron support, multi-modal models, and new RL algorithms/examples.

- [2025-08] Introducing CHORD: dynamic SFT + RL integration for advanced LLM fine-tuning (paper).

- [2025-08] [Release Notes] Trinity-RFT v0.2.1 released.

- [2025-07] [Release Notes] Trinity-RFT v0.2.0 released.

- [2025-07] Technical report (arXiv v2) updated with new features, examples, and experiments: link.

- [2025-06] [Release Notes] Trinity-RFT v0.1.1 released.

- [2025-05] [Release Notes] Trinity-RFT v0.1.0 released, plus technical report.

- [2025-04] Trinity-RFT open sourced.

Note: This project is currently under active development. Comments and suggestions are welcome!

Before installing, make sure your system meets the following requirements:

- Python: version 3.10 to 3.12 (inclusive)

- CUDA: version >= 12.6

- GPUs: at least 2 GPUs
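A small pre-flight script can check these requirements before installation. It is a hypothetical helper, not shipped with Trinity-RFT: it assumes `nvidia-smi` is on `PATH` for counting GPUs, and it reads the CUDA version that PyTorch was built against (`torch.version.cuda`), which is only available once PyTorch is installed and may differ from the system CUDA toolkit version.

```python
import shutil
import subprocess
import sys
from typing import Optional, Tuple


def python_ok(version: Optional[Tuple[int, int]] = None) -> bool:
    # Supported interpreter range stated above: 3.10 to 3.12 inclusive.
    major_minor = version or tuple(sys.version_info[:2])
    return (3, 10) <= major_minor <= (3, 12)


def version_at_least(version: str, minimum: str) -> bool:
    # Numeric comparison of dotted versions, so "12.10" >= "12.6" holds.
    def parse(v: str) -> Tuple[int, ...]:
        return tuple(int(part) for part in v.split("."))
    return parse(version) >= parse(minimum)


def torch_cuda_version() -> Optional[str]:
    # CUDA version PyTorch was built against (None if PyTorch is absent or CPU-only).
    try:
        import torch
    except ImportError:
        return None
    return torch.version.cuda


def count_gpus() -> int:
    # Counts "GPU <n>: ..." lines from `nvidia-smi -L`; 0 if the tool is missing or fails.
    if shutil.which("nvidia-smi") is None:
        return 0
    result = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True)
    if result.returncode != 0:
        return 0
    return sum(1 for line in result.stdout.splitlines() if line.startswith("GPU "))


def report() -> None:
    cuda = torch_cuda_version()
    cuda_ok = cuda is not None and version_at_least(cuda, "12.6")
    print(f"Python 3.10-3.12: {python_ok()}")
    print(f"CUDA >= 12.6: {cuda_ok} (torch reports {cuda})")
    print(f"At least 2 GPUs: {count_gpus() >= 2}")


if __name__ == "__main__":
    report()
```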

If you plan to customize or contribute to Trinity-RFT, installing from source is the best option.

```bash
git clone https://github.com/modelscope/Trinity-RFT
cd Trinity-RFT
```

Choose one of the following options:

Using conda:

```bash
conda create -n trinity python=3.10
conda activate trinity

pip install -e ".[dev]"
pip install -e ".[flash_attn]"
# if you encounter issues when installing flash-attn, try:
# pip install flash-attn==2.8.1 --no-build-isolation
```

Using venv:

```bash
python3.10 -m venv .venv
source .venv/bin/activate

pip install -e ".[dev]"
pip install -e ".[flash_attn]"
# if you encounter issues when installing flash-attn, try:
# pip install flash-attn==2.8.1 --no-build-isolation
```

Using uv, a modern Python package installer:

```bash
uv sync --extra dev --extra flash_attn
```

If you just want to use the package without modifying the code:

```bash
pip install trinity-rft
pip install flash-attn==2.8.1
```

Or with uv:

```bash
uv pip install trinity-rft
uv pip install flash-attn==2.8.1
```

We provide a Docker setup for hassle-free environment configuration.

```bash
git clone https://github.com/modelscope/Trinity-RFT
cd Trinity-RFT

# Build the Docker image
## Tip: You can modify the Dockerfile to add mirrors or set API keys
docker build -f scripts/docker/Dockerfile -t trinity-rft:latest .

# Run the container, replacing <path_to_your_data_and_checkpoints> with your actual path
docker run -it \
    --gpus all \
    --shm-size="64g" \
    --rm \
    -v $PWD:/workspace \
    -v <path_to_your_data_and_checkpoints>:/data \
    trinity-rft:latest
```

For training with Megatron-LM, please refer to Megatron-LM Backend.
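After any of the installation routes above, a quick sanity check is to confirm that the distributions are visible to the active interpreter. This sketch uses the standard-library `importlib.metadata`; the distribution names `trinity-rft` and `flash-attn` are taken from the pip commands above, and looking for a `trinity` executable on `PATH` assumes the CLI used later in this README was installed as a console script.

```python
import shutil
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(distributions: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in distributions:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # Distribution names taken from the pip commands above.
    for dist, ver in installed_versions(["trinity-rft", "flash-attn"]).items():
        print(f"{dist}: {ver or 'not installed'}")
    # Assumes the `trinity` CLI is installed as a console script on PATH.
    print("trinity CLI:", shutil.which("trinity") or "not found on PATH")
```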

Trinity-RFT supports most datasets and models from Hugging Face and ModelScope.

Prepare the model in the local directory $MODEL_PATH/{model_name}:

```bash
# Using Hugging Face
huggingface-cli download {model_name} --local-dir $MODEL_PATH/{model_name}

# Using ModelScope
modelscope download {model_name} --local_dir $MODEL_PATH/{model_name}
```

For more details about model downloading, see Hugging Face or ModelScope.

Prepare the dataset in the local directory $DATASET_PATH/{dataset_name}:

```bash
# Using Hugging Face
huggingface-cli download {dataset_name} --repo-type dataset --local-dir $DATASET_PATH/{dataset_name}

# Using ModelScope
modelscope download --dataset {dataset_name} --local_dir $DATASET_PATH/{dataset_name}
```

For more details about dataset downloading, see Hugging Face or ModelScope.
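The same directory layout can be produced from Python with the `huggingface_hub` library's `snapshot_download`, which is convenient inside scripts. This is a hedged sketch for the Hugging Face route only (ModelScope offers its own Python API, not shown here); the helper names `local_dir` and `download` are hypothetical, and it assumes `MODEL_PATH` and `DATASET_PATH` are exported as environment variables.

```python
import os
from pathlib import Path


def local_dir(root_env: str, repo_id: str) -> Path:
    # Mirrors the $MODEL_PATH/{model_name} and $DATASET_PATH/{dataset_name} layout above.
    root = os.environ.get(root_env)
    if not root:
        raise RuntimeError(f"environment variable {root_env} is not set")
    return Path(root) / repo_id


def download(repo_id: str, repo_type: str = "model") -> Path:
    if repo_type not in ("model", "dataset"):
        raise ValueError("repo_type must be 'model' or 'dataset'")
    # Imported lazily so local_dir() works even without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    target = local_dir("MODEL_PATH" if repo_type == "model" else "DATASET_PATH", repo_id)
    snapshot_download(repo_id=repo_id, repo_type=repo_type, local_dir=str(target))
    return target
```

For example, `download("Qwen/Qwen2.5-1.5B-Instruct")` would place the model under `$MODEL_PATH/Qwen/Qwen2.5-1.5B-Instruct`, and `download("openai/gsm8k", repo_type="dataset")` would place the dataset under `$DATASET_PATH/openai/gsm8k`.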

Trinity-RFT provides a web interface for configuring your RFT process.

Note: This is an experimental feature, and we will continue to improve it.

To launch the web interface for minimal configurations, run:

```bash
trinity studio --port 8080
```

Then you can configure your RFT process in the web page and generate a config file. You can save the config file for later use or run it directly as described in the following section.

Advanced users can also edit the config file directly.

We provide example config files in the examples directory.

For complete GUI features, please refer to the monorepo for Trinity-Studio.

Start a Ray cluster:

```bash
# On master node
ray start --head

# On worker nodes
ray start --address=<master_address>
```

(Optional) You may use Wandb / TensorBoard / MLflow for better monitoring. Please refer to this documentation for the corresponding configurations. For example, to log in to Wandb:

```bash
export WANDB_API_KEY=<your_api_key>
wandb login
```

For command-line users, run the RFT process:

```bash
trinity run --config <config_path>
```

For example, below is the command for fine-tuning Qwen2.5-1.5B-Instruct on GSM8k with GRPO:

```bash
trinity run --config examples/grpo_gsm8k/gsm8k.yaml
```

For studio users, click "Run" in the web interface.
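The GSM8k example combines a rule-based reward with GRPO's group-relative advantage, where each sampled response's reward is normalized against the other responses to the same prompt: A_i = (r_i - mean(r)) / (std(r) + eps). The sketch below illustrates both ideas generically and is not the code behind `examples/grpo_gsm8k/gsm8k.yaml`; the answer-extraction rule (the text after GSM8k's `####` marker, else the last number), the use of the population standard deviation, and every function name are assumptions.

```python
import re
import statistics
from typing import List

# Signed integers or decimals, allowing thousands separators such as "1,234".
_NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def extract_answer(text: str) -> str:
    # GSM8k reference solutions end with "#### <answer>"; otherwise use the last number.
    if "####" in text:
        text = text.split("####")[-1]
    numbers = _NUMBER.findall(text)
    return numbers[-1].replace(",", "") if numbers else ""


def gsm8k_reward(response: str, reference: str) -> float:
    # Rule-based reward: 1.0 if the final numbers match numerically, else 0.0.
    try:
        return float(float(extract_answer(response)) == float(extract_answer(reference)))
    except ValueError:  # no number could be extracted
        return 0.0


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    # GRPO-style advantage within one prompt's group of sampled responses.
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    reference = "She sold 48 + 24 clips.\n#### 72"
    responses = ["48 + 24 = 72", "The answer is 70.", "72 clips", "I am not sure."]
    rewards = [gsm8k_reward(r, reference) for r in responses]
    print(rewards)  # [1.0, 0.0, 1.0, 0.0]
    print(group_relative_advantages(rewards))
```

When every response in a group earns the same reward, all advantages are zero, so such a group contributes no learning signal; this is one reason group size and task difficulty matter in GRPO-style training.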

This project is currently under active development, and we welcome contributions from the community!

See CONTRIBUTING.md for detailed contribution guidelines.

This project is built upon many excellent open-source projects, including:

- verl, FSDP and Megatron-LM for LLM training;

- vLLM for LLM inference;

- Data-Juicer for data processing pipelines;

- AgentScope for agentic workflow;

- Ray for distributed systems;

- We have also drawn inspiration from RL frameworks such as OpenRLHF, TRL and ChatLearn;

- ......

```bibtex
@misc{trinity-rft,
  title={Trinity-RFT: A General-Purpose and Unified Framework for Reinforcement Fine-Tuning of Large Language Models},
  author={Xuchen Pan and Yanxi Chen and Yushuo Chen and Yuchang Sun and Daoyuan Chen and Wenhao Zhang and Yuexiang Xie and Yilun Huang and Yilei Zhang and Dawei Gao and Yaliang Li and Bolin Ding and Jingren Zhou},
  year={2025},
  eprint={2505.17826},
  archivePrefix={arXiv},
  primaryClass={cs.LG},
  url={https://arxiv.org/abs/2505.17826},
}
```